
Key factors for effective security automation

Published 2 years ago by GFiuui45fg

Harnessing the potential of automation in cybersecurity is key to maintaining a robust defense against ever-evolving threats. Still, this approach comes with its own unique challenges.

In this Help Net Security interview, Oliver Rochford, Chief Futurist at Tenzir, discusses how automation can be strategically integrated with human expertise, the challenges in ensuring data integrity, and the considerations when automating advanced tasks.

Because human intelligence is still crucial in cybersecurity, how can automation be used strategically alongside human judgment and expertise to identify threats and analyze patterns more effectively?

Ideally, we want to automate as much of the menial and repetitive work as possible to free up scarce and stretched cybersecurity experts to focus on high-value tasks. Human cognition is irreplaceable in behavioral analysis that requires context or institutional knowledge, and in determining the correct course of action when a situation is not easily categorized.

In practice, this might mean automating subtasks rather than entire processes, such as correlating or enriching event data, fetching additional forensic evidence, or opening and annotating incident tickets. What’s key is designing ergonomic workflows where automation is embedded in a way that helps security analysts and threat hunters focus on analysis and decision-making, while reducing their cognitive workload and avoiding context switching. It’s using the strengths of each (for machines, parsing, processing, correlating and analyzing large amounts of data; for humans, making sense of it) that makes human-machine teaming greater than the sum of its parts.
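As a rough illustration of automating a subtask rather than a whole process, the sketch below enriches a raw event with asset, user, and threat intelligence context and drafts an annotated ticket, but deliberately leaves the verdict to the analyst. The lookup tables, field names, and the `enrich_event`/`draft_ticket` helpers are hypothetical; a real deployment would query a CMDB, an identity provider, or a threat intelligence platform instead of in-memory dictionaries.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical context sources standing in for a CMDB, an identity
# provider, and a machine-readable threat intelligence feed.
ASSET_OWNERS = {"srv-db-01": "finance", "wks-042": "engineering"}
USER_ROLES = {"alice": "dba", "bob": "developer"}
KNOWN_BAD_IPS = {"203.0.113.7"}


@dataclass
class Ticket:
    title: str
    notes: List[str] = field(default_factory=list)
    verdict: Optional[str] = None  # intentionally left empty: the analyst decides


def enrich_event(event: dict) -> dict:
    """Return a copy of the event annotated with asset, user, and intel context."""
    enriched = dict(event)
    enriched["asset_owner"] = ASSET_OWNERS.get(event.get("host"), "unknown")
    enriched["user_role"] = USER_ROLES.get(event.get("user"), "unknown")
    enriched["ip_on_blocklist"] = event.get("src_ip") in KNOWN_BAD_IPS
    return enriched


def draft_ticket(event: dict) -> Ticket:
    """Open a ticket pre-filled with enrichment so the analyst starts with context."""
    enriched = enrich_event(event)
    ticket = Ticket(title=f"Suspicious activity on {enriched.get('host', 'unknown host')}")
    ticket.notes.append(f"Asset owner: {enriched['asset_owner']}")
    ticket.notes.append(f"User role: {enriched['user_role']}")
    if enriched["ip_on_blocklist"]:
        ticket.notes.append(f"Source IP {enriched['src_ip']} matches threat intel")
    return ticket
```

The machine does the lookups and the paperwork; the human reads a ticket that already carries the context needed to decide.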

But a more aggressive automation strategy is increasingly needed to fend off machine-speed attacks, or when attacks are detected in the later stages of the kill chain (for example, data exfiltration), where a manual process may not act quickly enough. Threat actors are also actively adopting automation. Speed will matter more and more.

What key elements are needed to ensure the data underpinning security automation can be trusted?

If, for example, we want to automate threat response and containment, we can tolerate only a very small number of false positives. First, we must ensure that we are collecting the right data sources, and that our data collection is reliable and consistent. We will then also need a way to determine when to initiate a response, via detection or correlation rules or, more commonly today, something like a machine learning model. Accurate detections may require additional data sources, for example machine-readable threat intelligence feeds, or asset and user role context.

All of this data has to be available in the right format and on time when needed. We will also need further decision-making logic to orchestrate the automated response process, like a response playbook in a SOAR solution. Every step of this multistep process requires a high level of data quality, integrity and reliability. Especially when automating something, the adage “bad data in, bad data out” takes on a more immediate meaning. Automating almost anything, even seemingly simple processes, often results in complex playbooks with many single points of failure, especially for data.
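To make “bad data in, bad data out” concrete, here is a minimal sketch of a data-quality gate placed in front of an automated containment step: an event that is incomplete, stale, or badly timestamped is never allowed to trigger an automatic response and is routed to an analyst instead. The required fields, the five-minute freshness budget, and the 0.95 score threshold are all assumptions chosen for illustration.

```python
from datetime import datetime, timedelta, timezone
from typing import List

# Assumed values for illustration only.
REQUIRED_FIELDS = ("timestamp", "host", "user", "action")
MAX_EVENT_AGE = timedelta(minutes=5)


def data_quality_problems(event: dict, now: datetime) -> List[str]:
    """List the reasons an event is unfit to drive an automated response."""
    problems = [f"missing {name}" for name in REQUIRED_FIELDS if not event.get(name)]
    ts = event.get("timestamp")
    if isinstance(ts, datetime):
        if ts.tzinfo is None:
            problems.append("timestamp has no timezone")
        elif now - ts > MAX_EVENT_AGE:
            problems.append("event is stale")
        elif ts > now:
            problems.append("timestamp is in the future")
    elif ts is not None:
        problems.append("timestamp is not a datetime")
    return problems


def route(event: dict, detection_score: float, now: datetime,
          threshold: float = 0.95) -> str:
    """Decide between automatic containment and handing the event to an analyst."""
    if data_quality_problems(event, now):
        return "analyst"       # untrusted data never drives an automatic response
    if detection_score >= threshold:
        return "auto_contain"  # high-confidence detection on trusted data
    return "analyst"
```

Each check is one of the “single points of failure” the answer mentions made explicit, so a failure degrades to human review rather than to a wrong automated action.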

What’s also becoming increasingly important, especially when it comes to building trust in automation, are model interpretability and explainability. Being able to trust the data that is used to drive automations is crucial. It’s hard to trust a black box, especially when false positives are by necessity still common.

Why are security leaders comfortable automating basic tasks but have reservations about more advanced tasks? What differentiates these two categories?

I think it’s hard to generalize because appetite for automation and tolerance for associated risks depend on many factors, including past experience, competitive and peer behavior, business pressures, and technical maturity and capabilities. More generally, though, how an organization as a whole approaches automation is an important factor. Security teams struggle to lead culture change without executive sponsorship.

A few factors generally drive the willingness to automate security. One is whether the risk of not automating exceeds the risk of an automation going wrong: if you conduct business in a high-risk environment, the potential damage from not automating can be higher than the risk of triggering an automated response based on a false positive. Financial fraud is a good example: banks routinely block suspicious transactions automatically, because a manual process would be too slow.

Another factor is when the damage potential of an automation going wrong is low. For example, there is no potential damage when trying to fetch a non-existent file from a remote system for forensic analysis.
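One way to make these two factors concrete is a simple expected-loss comparison: automate when the expected damage from responding too slowly exceeds the expected cost of acting on false positives. This framing and the numbers in the checks below are illustrative assumptions, not figures from the interview.

```python
def should_automate(p_true_threat: float, damage_if_slow: float,
                    cost_of_false_response: float) -> bool:
    """Compare expected losses of manual handling vs. automated response.

    Simplifying assumptions: an automated response fully prevents the damage
    when the alert is real, a manual response arrives too late to prevent it,
    and acting on a false positive costs a fixed amount (for example, a
    blocked legitimate transaction).
    """
    if not 0.0 <= p_true_threat <= 1.0:
        raise ValueError("p_true_threat must be a probability")
    expected_loss_manual = p_true_threat * damage_if_slow
    expected_loss_automated = (1.0 - p_true_threat) * cost_of_false_response
    return expected_loss_automated < expected_loss_manual
```

With a fraud-like profile (10% of flagged transactions fraudulent, 5,000 lost per missed fraud, 20 per wrongly blocked payment) automation wins easily. When a false response costs nothing, as with fetching a file that turns out not to exist, any nonzero chance of a real threat tips the balance toward automating.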

But what really matters most is how reliable automation is. For example, many threat actors today use living-off-the-land techniques, such as using common and benign system utilities like PowerShell. From a detection perspective, there are no uniquely identifiable characteristics like a file hash or a malicious binary to inspect in a sandbox. What matters instead is who is using the utility. The attacker has probably also stolen admin credentials, so even that’s not enough to accurately detect malicious behavior.

What also matters is how PowerShell is being used. That, however, requires deep knowledge about what other users typically do, which assets they usually work on, and so on. That’s why you generally see unsupervised machine learning being applied to those sorts of problems, as it helps to detect anomalies rather than needing prior knowledge of indicators of compromise. But it also means that false positives are common. That’s the trade-off.
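The idea of learning “which assets they usually work on” can be sketched with a per-user baseline. The example below uses a deliberately simple frequency count rather than the unsupervised machine learning models the answer refers to; the event fields and the `min_seen` cut-off are hypothetical.

```python
from collections import Counter
from typing import Iterable, List


class PowerShellBaseline:
    """Per-user baseline of the hosts on which each user normally runs PowerShell.

    A simple frequency model standing in for unsupervised anomaly detection:
    it needs no indicators of compromise, only history.
    """

    def __init__(self, min_seen: int = 3):
        self.min_seen = min_seen       # occurrences needed before a pairing counts as normal
        self.pair_counts = Counter()   # (user, host) -> PowerShell launches observed
        self.user_counts = Counter()   # user -> total PowerShell launches observed

    def learn(self, events: Iterable[dict]) -> None:
        for e in events:
            self.pair_counts[(e["user"], e["host"])] += 1
            self.user_counts[e["user"]] += 1

    def anomaly_reasons(self, event: dict) -> List[str]:
        """Empty list means the event looks like normal behavior for this user."""
        reasons = []
        if self.user_counts[event["user"]] == 0:
            reasons.append("user has never run PowerShell before")
        elif self.pair_counts[(event["user"], event["host"])] < self.min_seen:
            reasons.append("user rarely runs PowerShell on this host")
        return reasons
```

An attacker using alice’s stolen credentials on a domain controller is flagged, but so is alice herself the first time she legitimately administers that server. Both produce the same signal, which is exactly the false-positive trade-off described above.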

So what it really boils down to is the reliability of, and confidence in, the decision-making logic. Sadly, there aren’t many hard rules either. How hard it is to detect something doesn’t always correlate with the amount of risk a threat represents.

Then you also have to consider that we are facing actual adversaries who frequently change how they attack and who also respond evasively to our defenses. Detection, and automating analysis more generally, are hard problems that we’ve only partially solved. Even LLMs won’t be able to solve them entirely.

What potential risks or pitfalls do you think organizations perceive when considering the automation of more complex security processes?

From a technical perspective, and conditioned by years of negative experiences with failed historical experiments like anti-spam filters and active intrusion prevention systems, organizations perceive the number and ratio of false negatives and false positives as critical. The ROI from automation declines rapidly if you end up spending the time you’ve saved on root cause analysis of false detections and on tuning and optimizing automation logic and rules. This is further compounded by the fact that more complex security automations in particular now rely on machine learning or similar data analytics techniques, which can suffer from a lack of interpretability and explainability.
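A back-of-the-envelope model shows why false positives erode ROI so quickly. The linear cost model and all inputs are assumptions for illustration, not measured figures.

```python
def net_hours_saved(alerts: int, minutes_saved_per_alert: float,
                    false_positive_rate: float, minutes_per_false_positive: float,
                    tuning_hours: float) -> float:
    """Hours saved by an automation after paying for false positives and tuning.

    Assumes every alert saves the same manual effort, every false positive
    needs the same root-cause analysis time, and tuning is a fixed overhead.
    """
    saved = alerts * minutes_saved_per_alert / 60
    false_positive_cost = alerts * false_positive_rate * minutes_per_false_positive / 60
    return saved - false_positive_cost - tuning_hours
```

With 1,000 alerts, 6 minutes saved per alert, 30 minutes of analysis per false positive and 10 hours of tuning, a 5% false-positive rate leaves 65 hours saved, while a 20% rate turns the same automation into a net loss of 10 hours.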

It is possible to focus selectively on automations that are highly accurate and reliable, but because accuracy varies widely regardless of threat type or risk, this will leave you maintaining a very mixed set of automations with gaps in coverage. That’s why selecting the right technologies for an automation-first approach is crucial as well. Security teams that want to automate heavily would be best advised to select technologies that bake in automation capabilities and lend themselves to increasing automatability.

Many cybersecurity teams seem to be overstretched, taking on various responsibilities. How do you see automation helping to alleviate this issue without compromising security?

We’ve experimented with automation since the inception of hacking and cybersecurity, from the very first port scanner that automated the process of telnetting to TCP/IP ports to see what’s running on them, right through to today’s detection-as-code. But we have been hampered by a lack of data and compute, and most automation capabilities have either been applied haphazardly or bolted on. Take SOAR, for example. Bolting automation onto everything makes as much sense as separating your head from your body. While it automates some processes, it doesn’t do so where the data is or where the work is done, and it relies on playbooks that have to be manually created and maintained. Beyond the one or two dozen most common playbooks, creating any more probably costs more time than it saves. We need to switch to an automation-first mindset, and we need automation capabilities to be included at every level in solutions, and also to enable automation between solutions.

We also need to learn how to maximize the potential of human-machine teaming, and how to develop workflows that combine the advantages of automation and a human analyst. This means automating tasks such as context and intelligence enrichment, mapping events to threat models, prefetching forensic data, or building data visualizations, everything to get the data into the right shape for a human to make sense of it and decide what’s next.

We also have some basic learning to do, for example how we can use human-in-the-loop and human-on-the-loop processes to make automation more effective and safe.
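The difference between the two supervision models can be sketched as two execution modes for the same response action. In the human-in-the-loop mode, the action waits for explicit analyst approval. In the human-on-the-loop mode, it runs immediately at machine speed while the analyst monitors it and can roll it back. The `ResponseRunner` class, the approval callback, and the undo mechanism are hypothetical stand-ins, not the API of any SOAR product.

```python
from typing import Callable, Dict, List, Optional

Action = Callable[[], None]


class ResponseRunner:
    """Runs a response action under one of two supervision modes (illustrative sketch)."""

    def __init__(self, mode: str):
        if mode not in ("in_the_loop", "on_the_loop"):
            raise ValueError("mode must be 'in_the_loop' or 'on_the_loop'")
        self.mode = mode
        self.audit_log: List[str] = []
        self.pending_review: Dict[str, Action] = {}  # description -> undo callback

    def run(self, description: str, action: Action, undo: Action,
            approve: Optional[Callable[[], bool]] = None) -> bool:
        """Return True if the action was executed."""
        if self.mode == "in_the_loop":
            # Human-in-the-loop: nothing happens until an analyst approves.
            if approve is None or not approve():
                self.audit_log.append(f"rejected: {description}")
                return False
            action()
            self.audit_log.append(f"approved and executed: {description}")
            return True
        # Human-on-the-loop: act immediately and keep the undo ready for the analyst.
        action()
        self.pending_review[description] = undo
        self.audit_log.append(f"executed, pending review: {description}")
        return True

    def override(self, description: str) -> None:
        """Analyst reverses an on-the-loop action after reviewing it."""
        undo = self.pending_review.pop(description)
        undo()
        self.audit_log.append(f"rolled back by analyst: {description}")
```

In-the-loop trades speed for safety; on-the-loop keeps machine speed for threats where waiting is too costly, at the price of needing a reliable way to reverse actions.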

Finally, if you were to advise organizations looking to leverage security automation, what should their primary considerations be? What should they avoid or pay particular attention to?

I’d say first and foremost, be strategic. Cultivating an automation mindset takes time and verifiable successes. Begin with low-hanging fruit by automating routine and repetitive tasks to free up cybersecurity experts to concentrate on high-value activities. This will also give you metrics to build the business cases to justify further automation and help convince the skeptics.

Start thinking ergonomically about how integrating automation into workflows can support security analysts and threat hunters, allowing them to focus on analysis and decision-making while minimizing cognitive workload and context switching.

In the case of automating containment, focus on threats that require a rapid response, where a manual process may not act quickly enough, like machine-speed attacks and threats detected in the later stages of the kill chain.

If you’re using machine learning or similar approaches to automate, seek solutions with good interpretability and explainability to better understand the decision-making process and build trust in the results. Automation adoption is dependent on trust. If a user experiences a few false alerts, they will begin doubting every result in the future.

Generally, choose automation technologies that embed automation capabilities and lend themselves to increasing automatability as you become more confident and mature. Try to maximize the potential of human-machine teaming by combining the strengths of automation and human analysts in well-defined workflows, utilizing human-in-the-loop and human-on-the-loop processes.

Lastly, embrace a learning mindset, and continuously measure and refine automation processes. In fact, automate that.
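Taking “automate that” literally, a minimal sketch might compute each automation’s precision from analyst verdicts and flag any automation that falls below a trust threshold for review. The verdict format, the automation names, and the 0.9 threshold are assumptions.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def precision_by_automation(verdicts: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """verdicts: (automation_name, was_true_positive) pairs from analyst review."""
    totals = defaultdict(lambda: [0, 0])  # name -> [true positives, times fired]
    for name, true_positive in verdicts:
        totals[name][1] += 1
        if true_positive:
            totals[name][0] += 1
    return {name: tp / fired for name, (tp, fired) in totals.items()}


def needing_review(verdicts: Iterable[Tuple[str, bool]], min_precision: float = 0.9) -> List[str]:
    """Names of automations whose measured precision has fallen below the threshold."""
    return sorted(name for name, p in precision_by_automation(verdicts).items()
                  if p < min_precision)
```

Precision only captures false positives; missed detections need a separate measure, for example recall against red-team exercises or confirmed incidents.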

Source: https://www.helpnetsecurity.com/2023/07/27/oliver-rochford-tenzir-security-teams-automation/

You may like

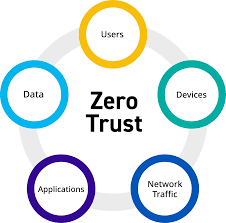

IoT security threats highlight the need for zero trust principles

New infosec products of the week: October 27, 2023

Raven: Open-source CI/CD pipeline security scanner

Apple news: iLeakage attack, MAC address leakage bug

Hackers earn over $1 million for 58 zero-days at Pwn2Own Toronto